5 Ways to Prepare Your Data Center for AI Workloads


2023 is the year of artificial intelligence (AI). While AI has been in the works for decades, it’s going mainstream with the introduction of generative AI chatbots like ChatGPT, Microsoft’s Bing, and Google’s Bard. AI is already being used in many industries, with applications utilizing data for learning and analytics.

AI wouldn’t be AI without data. And that’s where data centers come in, providing the storage for data and the processing power for the AI models to work on that data. Embracing AI has become a necessity for enterprises running data centers.

But AI workloads aren’t like the usual applications. AI applications require higher processor density, which, in turn, requires more power. Data centers must brace for the bigger power draw of such applications and utilize technologies and protocols to maximize efficiency.

This article lists some ways data centers can better prepare for AI workloads.

The AI Revolution Is Here

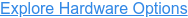

You need only look at the statistics to get a realistic picture of AI penetration. The AI market is expected to grow at a compound annual growth rate of 23% from 2023 to 2028, after growing rapidly for the past few years. The generative AI market in particular is projected to cross $100 billion in 2032.

(Data: source)

According to the IBM Global AI Adoption Index 2022, 35% of organizations are using AI in some capacity.

While there’s some skepticism about AI’s economic impact, most analysts believe AI will add to the global economy. McKinsey estimates that AI could add about $13 trillion in economic output by 2030, lifting global GDP growth by roughly 1.2% a year.

The recent hype around AI-powered chatbots is only the beginning of what’s to come. Big tech corporations are putting their weight behind AI, investing billions to develop sophisticated generative AI that creates new content rather than only making predictions from existing data.

Businesses and individuals need to adapt and do it fast.

5 Ways Data Centers Can Prepare to Handle AI Applications

AI is changing how businesses run for the better, but it’s not without its challenges. AI applications are power-hungry, requiring more power and processing capacity than typical workloads. A data center running on run-of-the-mill servers may be unable to support these applications.

So what can be done to a data center to make its infrastructure handle AI workloads efficiently? Here are five ways:

Segment AI Handling

Your data center will handle AI applications but will continue supporting other applications that need less compute. While you should prepare for higher energy consumption, that doesn’t need to apply to the entire data center. In other words, it’s better to create a contained segment within the data center for handling AI workloads.

Segmentation based on computing and power consumption can save setup costs and also reduce energy wastage. The segments that support AI applications should be provisioned for more power draw and supplied with processor-dense servers.

The remaining data center segments can handle other workloads using less power.

Of course, this is easier said than done. To implement this type of segmentation, you should analyze your energy supply and consumption. If the facility requires more power, arrange for the additional supply and ensure that the AI segment gets the power it needs.

Energy needs can vary significantly from one facility to another, so it’s best to bring in a professional to assess your specific data center.
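As a rough illustration of the supply-versus-consumption analysis described above, the following sketch totals the expected draw per segment and compares it with facility capacity. The `Segment` class, the `headroom_kw` helper, the 20% reserve, the 30 kW AI rack, and the 600 kW facility are all assumptions made for the example; only the 7 kW typical rack figure comes from this article.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One power-provisioned zone of the data center (hypothetical model)."""
    name: str
    racks: int
    kw_per_rack: float  # assumed average draw per rack, in kW

    @property
    def total_kw(self) -> float:
        return self.racks * self.kw_per_rack


def headroom_kw(segments, facility_capacity_kw, reserve_fraction=0.2):
    """Return spare capacity in kW after all segments and a safety reserve.

    A negative result means the facility needs more power before the
    planned segments can be supported.
    """
    usable_kw = facility_capacity_kw * (1 - reserve_fraction)
    return usable_kw - sum(s.total_kw for s in segments)


# Illustrative numbers only: 7 kW is the typical rack figure cited in this
# article; the 30 kW AI rack and the 600 kW facility are assumptions.
segments = [
    Segment("general", racks=40, kw_per_rack=7.0),
    Segment("ai", racks=6, kw_per_rack=30.0),
]

for s in segments:
    print(f"{s.name}: {s.total_kw:.0f} kW")
print(f"headroom: {headroom_kw(segments, facility_capacity_kw=600.0):.0f} kW")
```

In this made-up case, six AI racks draw nearly two-thirds as much as forty general-purpose racks, which is why provisioning the AI segment separately can make sense. Real planning should use measured draw rather than nameplate or assumed values.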

Refresh to Next-Generation Servers

Manufacturers are consistently improving server designs, addressing both performance and efficiency needs. The next-generation servers are better suited to handling high-compute processes like those of deep learning. One way to ensure your data center is prepared for a future full of AI is to refresh to the latest servers.

Buying new servers is a big investment that will set you back anywhere from tens of thousands to perhaps millions of dollars. However, it’s important to keep your infrastructure ready for the latest developments in technology, and AI isn’t a passing trend; it’s here to stay.

Whether you should invest in new servers depends on how old your existing servers are, what they are capable of, and whether you have the budget to upgrade.

Take a step back and analyze your existing servers, make a timeline of their remaining life, and assess the processing needs by analyzing historical data. Think about your future plans. What kind of applications do you want to target? Can your existing servers support them?

If your servers are only a couple of years old, you’re likely better off waiting another few years to refresh.
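The remaining-life timeline mentioned above can be sketched in a few lines. This is a minimal example under stated assumptions: the `Server` class, the five-year service life, the one-year planning horizon, and the fleet data are all hypothetical placeholders for your own inventory and policy.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    purchase_year: int


def remaining_life_years(server, current_year, service_life_years=5):
    """Years left before the server reaches its assumed service life."""
    end_of_life = server.purchase_year + service_life_years
    return max(0, end_of_life - current_year)


def refresh_candidates(servers, current_year, service_life_years=5, horizon_years=1):
    """Names of servers whose remaining life falls within the planning horizon."""
    return [
        s.name
        for s in servers
        if remaining_life_years(s, current_year, service_life_years) <= horizon_years
    ]


# Hypothetical fleet, for illustration only.
fleet = [
    Server("db-01", purchase_year=2018),
    Server("web-01", purchase_year=2021),
    Server("gpu-01", purchase_year=2022),
]

for s in fleet:
    print(s.name, remaining_life_years(s, current_year=2023), "years left")
print("refresh soon:", refresh_candidates(fleet, current_year=2023))
```

Age is only one input. Whether a server can support the applications you plan to target, and whether the budget allows an upgrade, still need to be weighed separately.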

Use Lower-Precision Workloads for AI

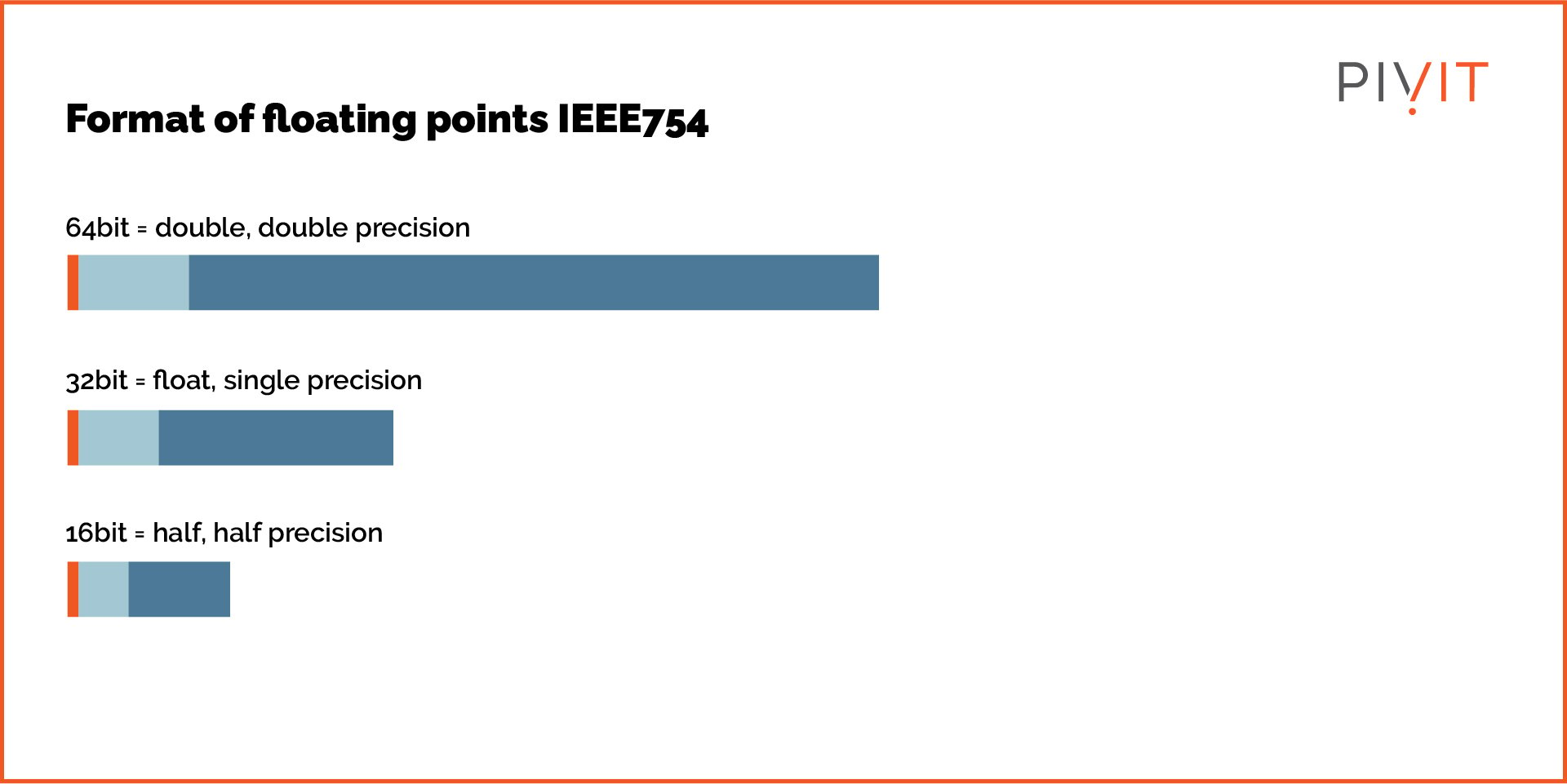

AI workloads, although compute-intensive, can often run at half precision (FP16, 16-bit floating point). In comparison, scientific research may need double-precision (64-bit) floating-point calculations.

Unless you’re running a supercomputer for scientific research, you can usually support AI applications with a lower-precision workload (i.e., quarter or half precision).

Using lower precision reduces the memory and compute each calculation needs, which can allow you to utilize existing servers to support AI. Single- and half-precision calculations are commonly used in deep neural networks.
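To make the precision trade-off concrete, here is a small sketch using only Python’s standard `struct` module, which can pack values as 64-bit, 32-bit, and 16-bit IEEE 754 floats. The helper names and the one-billion-parameter model size are assumptions chosen for illustration, not figures from this article.

```python
import struct

# struct format codes for IEEE 754 floating-point types.
FORMATS = {
    "FP64 (double)": "d",
    "FP32 (single)": "f",
    "FP16 (half)": "e",
}


def bytes_per_value(fmt: str) -> int:
    """Storage size of one value in the given format."""
    return struct.calcsize("<" + fmt)


def round_trip(value: float, fmt: str) -> float:
    """Store a value at the given precision and read it back."""
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, value))[0]


def weights_size_gb(num_params: int, fmt: str) -> float:
    """Memory needed to hold num_params weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_value(fmt) / 1e9


NUM_PARAMS = 1_000_000_000  # hypothetical model size

for label, fmt in FORMATS.items():
    stored = round_trip(0.1, fmt)
    print(
        f"{label}: {bytes_per_value(fmt)} bytes/value, "
        f"0.1 stored as {stored!r}, "
        f"weights need {weights_size_gb(NUM_PARAMS, fmt):.0f} GB"
    )
```

Half precision stores each value in a quarter of the space of double precision, at the cost of a larger rounding error. Many neural networks tolerate that error, while some numerical simulations do not.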

GPUs and modern AI chips are gaining traction as optimal components for running AI workloads, but the good old CPU also plays a role. CPUs can support certain AI algorithms just as well as GPUs.

Invest in Liquid Cooling

AI applications can require much more power than the 7 kW consumption of a typical rack. All that extra power consumption generates so much heat that air cooling alone may no longer be feasible. Liquid, specifically water, has a much higher heat capacity than air.
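The heat-capacity difference can be estimated with the standard relation Q = ρ · V · c_p · ΔT, where V is the volumetric flow rate of the coolant. The sketch below uses approximate room-temperature properties for water and air. The 30 kW rack load and the 10 K coolant temperature rise are assumptions chosen for illustration.

```python
# Approximate fluid properties near room temperature.
WATER = {"density": 997.0, "cp": 4186.0}  # kg/m^3, J/(kg*K)
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)


def volumetric_heat_capacity(fluid) -> float:
    """Heat absorbed per cubic metre per kelvin, in J/(m^3*K)."""
    return fluid["density"] * fluid["cp"]


def required_flow_m3_per_s(heat_w: float, fluid, delta_t_k: float) -> float:
    """Coolant flow needed to carry away heat_w watts with a delta_t_k rise."""
    return heat_w / (volumetric_heat_capacity(fluid) * delta_t_k)


RACK_HEAT_W = 30_000.0  # hypothetical high-density AI rack
DELTA_T_K = 10.0        # assumed coolant temperature rise

ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
water_flow = required_flow_m3_per_s(RACK_HEAT_W, WATER, DELTA_T_K)
air_flow = required_flow_m3_per_s(RACK_HEAT_W, AIR, DELTA_T_K)

print(f"water holds about {ratio:.0f}x more heat per unit volume than air")
print(f"water flow needed: {water_flow * 1000:.2f} L/s")
print(f"air flow needed:   {air_flow:.2f} m^3/s")
```

Per unit volume, water absorbs a few thousand times more heat than air for the same temperature rise, so a modest water flow can do the work of a very large airflow.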

Liquid cooling has been making waves in the data center sector, as it’s much more efficient at cooling than air. However, with AI workloads, it may just become a necessity.

Liquid cooling can even coexist with the traditional cold-aisle and hot-aisle setup of data centers. The main difference is that instead of relying on fans, water pipes are connected to the heat sinks and carry away the heat generated by the servers.

So while you prepare your infrastructure to handle the higher-density workloads of AI, you should also consider how you plan to address the heat generated. Introducing liquid cooling to your existing data center may be costly initially, but over the long term it can deliver measurable efficiency gains and better performance.

Consider Edge Computing

One way to better process AI use cases is to move some of the processing power closer to where the data is being generated. In other words, edge computing can help reduce the energy consumed by moving data.

Moving data across the network consumes power at every step of the infrastructure it passes through. Energy consumption can be reduced by eliminating the need for data to travel or by shortening the distance it travels. Since AI requires high volumes of data, edge computing helps by handling the processing nearer to the source.

Establishing an edge data center isn’t just great for saving energy; it also markedly improves response times. Processed data is readily available to the user and doesn’t have to travel long distances, saving time and energy.
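The response-time benefit can be approximated from distance alone. Light travels through optical fiber at roughly 200,000 km/s, about two-thirds of its speed in a vacuum, so every kilometre adds delay. The sketch below estimates round-trip propagation delay only. It ignores routing, queuing, and processing time, and the site distances are hypothetical.

```python
# Approximate signal speed in optical fiber (about 2/3 the speed of light).
FIBER_SPEED_KM_PER_S = 200_000.0


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds.

    Covers propagation only; real latency is higher once switching,
    queuing, and server processing are included.
    """
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000.0


# Hypothetical distances between the data source and the processing site.
sites = [
    ("edge site", 50),
    ("regional data center", 500),
    ("distant data center", 2000),
]

for label, km in sites:
    print(f"{label} ({km} km): {round_trip_ms(km):.1f} ms round trip")
```

For applications that make many round trips per request, the gap between an edge site and a distant facility is multiplied accordingly.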

Consider PivIT for Next-Gen Hardware Needs!

A future-proof data center is AI-ready, and it’s high time data centers embraced the revolution. Handling AI workloads requires reliable infrastructure with higher computing power and energy efficiency. Investing in next-generation servers and network equipment is a step in the right direction for data centers catering to AI applications.

PivIT can help you procure the latest servers from major OEMs to keep your data center fresh and prepared for whatever AI applications throw at it. With savings as high as 60%, consider refreshing your servers and other equipment with the help of PivIT’s specialists.

Learn more about PivIT’s hardware procurement and what it can do for your data center!