How NVIDIA AI Enterprise Wants to Change Enterprise Data Centers

When it comes to enterprise networking and data centers, we often think of juggernauts like Cisco, Dell, HPE, and Intel. But another tech company's chips and platforms may soon become integral to data centers worldwide. NVIDIA, a leading GPU maker, is expanding its influence in the enterprise tier through its NVIDIA AI Enterprise platform.

The company’s foray into enterprise hardware and software isn’t new. Years ago, it announced that it planned to gradually shift its focus toward data centers.

That shift accelerated sharply this year, driven by the surge of interest in generative AI. NVIDIA has been seizing the moment with product launches, strategic partnerships, and potentially profitable acquisitions.

Hyperscalers and enterprise data centers are preparing for an overhaul of infrastructure to support AI applications. NVIDIA is at the forefront of technology that will enable enterprises to bring their data centers up to speed for AI workloads.

In this article, we will discuss the following:

- How NVIDIA is growing in the enterprise data center market.

- How its various products are contributing to its goal.

- How it can potentially help transform your enterprise.

NVIDIA Leading the Charge With AI

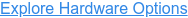

As a GPU maker, NVIDIA was well positioned to take on the AI market. Large language models (LLMs) such as GPT, which powers the ubiquitous chatbot ChatGPT, require massive computing power. GPUs are, by design, better at meeting those computational requirements because they can run many computations in parallel.
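
To make the parallelism point concrete, here is a minimal sketch in plain Python. It is not GPU code and does not use any NVIDIA library; it only illustrates, on the CPU, why workloads like matrix multiplication suit parallel hardware: every output cell can be computed independently of the others. The function names `dot` and `matmul_parallel` are hypothetical, chosen for this illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """One independent unit of work: a single output cell."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(A, B, workers=4):
    """Multiply two matrices by treating each output cell as its own task.

    Illustrative only: a GPU applies the same idea across thousands of
    hardware threads, whereas this sketch uses a small CPU thread pool.
    """
    cols = list(zip(*B))  # columns of B
    # Build one (row, column) task per output cell, in row-major order.
    tasks = [(row, col) for row in A for col in cols]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda rc: dot(*rc), tasks))
    n = len(cols)
    # Reshape the flat list of results back into rows.
    return [flat[i * n:(i + 1) * n] for i in range(len(A))]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
result = matmul_parallel(A, B)
print(result)  # [[19, 22], [43, 50]]
```

None of the tasks depends on another task's result, which is the property that lets hardware with many cores work on them at the same time.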

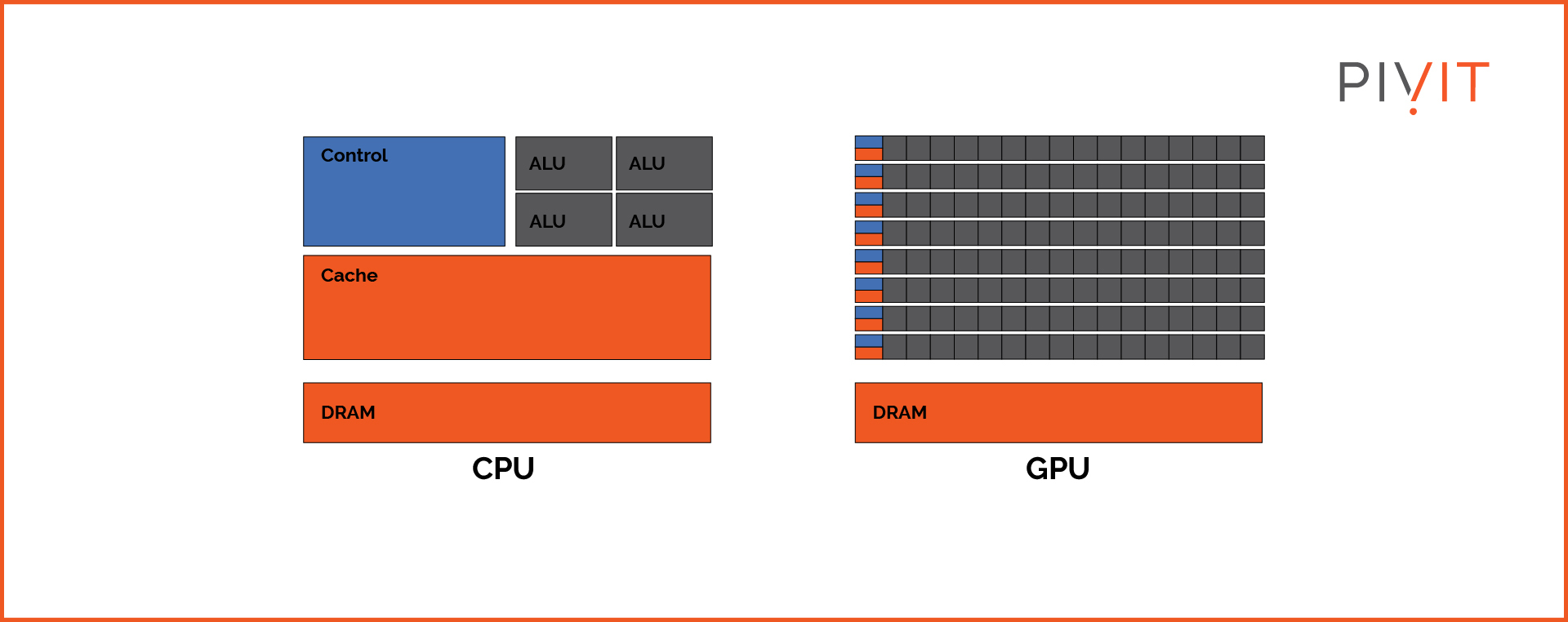

NVIDIA, which had over 17 percent of GPU market share as of 2022, is behind some of the latest innovations in AI. Thousands of NVIDIA GPUs powered the GPT language models developed by OpenAI in collaboration with Microsoft.

The company's GPUs have long dominated the PC gaming market. With the explosion of generative AI, however, their use cases have shifted, and hyperscalers and enterprises working on AI projects are opting for its latest offerings.

That said, NVIDIA had been investing in AI long before ChatGPT became popular, with its researchers and engineers working on AI accelerators.

More importantly, the company wasn't just betting on GPUs. It was also developing software platforms to enable AI applications at the enterprise level, such as the NVIDIA AI Enterprise platform and the CUDA Toolkit.

Today, it offers what it claims is the world's most advanced AI platform for a wide range of use cases, such as model training, cybersecurity, and data analytics.

Other IT vendors eyeing the growing market for AI-ready hardware are also tapping NVIDIA's GPUs for their solutions. New products, from servers to storage, use the company's GPUs to process compute-intensive workloads.

NVIDIA has gone from a GPU manufacturer to an AI supercomputing company, not just because of its primary product but also because of the investments it has made over the years.

A Growing Ecosystem With Acquisitions and Partnerships

One of the reasons why NVIDIA is so far ahead in AI innovation in hardware and software is that it’s not doing everything independently. It has strategically acquired companies working on AI-related technologies and solving specific problems around them. Similarly, it has formed partnerships with some of the biggest names in the industry to offer AI solutions to enterprises.

In other words, the company is, for now, taking a collaborative rather than a competitive approach. This has created a whole ecosystem of products and solutions catering to enterprise data centers looking to adapt to AI.

Here are some of the most notable collaborations:

- NVIDIA has partnered with Dell to offer enterprises on-premises generative AI solutions. This offering combines NVIDIA's GPUs and NeMo LLM framework with Dell's servers and storage, all shipped preconfigured for enterprise needs.

- It has partnered with VMware to introduce VMware Private AI Foundation with NVIDIA, a fully integrated generative AI platform that can be used in private clouds and on-premises.

- Its DGX Cloud, a robust hardware and software package capable of running AI models, runs on Oracle Cloud.

- The company is building AI Lighthouse in collaboration with ServiceNow and Accenture to enable companies to build their own LLMs and AI applications.

Numbers Don’t Lie

NVIDIA's earnings in 2023 are further evidence of its success with AI, particularly in the enterprise data center sector. In its fiscal second quarter, which ended in July 2023, the company reported revenue of $13.5 billion, a 101 percent increase from the same quarter a year earlier and an 88 percent increase from the previous quarter.

Notably, over $10 billion of this revenue came from data centers, showing how the company's various products, particularly GPUs, are making inroads in the hyperscaler and enterprise data center markets.
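
The figures above can be put in perspective with some quick arithmetic. The sketch below uses only the rounded numbers quoted in this article ($13.5 billion in revenue, 101 percent and 88 percent growth, and at least $10 billion from data centers), so its results are approximations derived from those inputs, not reported figures. The helper `implied_base` is a hypothetical name used for illustration.

```python
# Rounded inputs taken from the article (billions of USD and growth rates).
revenue_b = 13.5          # quarterly revenue
yoy_growth = 1.01         # 101 percent year-over-year increase
qoq_growth = 0.88         # 88 percent quarter-over-quarter increase
data_center_min_b = 10.0  # "over $10 billion" from data centers (lower bound)


def implied_base(current, growth):
    """Back out the earlier-period value implied by a growth rate."""
    return current / (1 + growth)


year_ago_b = implied_base(revenue_b, yoy_growth)
prev_quarter_b = implied_base(revenue_b, qoq_growth)
data_center_share = data_center_min_b / revenue_b

print(f"Implied year-ago revenue:      ~${year_ago_b:.2f}B")      # ~$6.72B
print(f"Implied prior-quarter revenue: ~${prev_quarter_b:.2f}B")  # ~$7.18B
print(f"Data center share (at least):  {data_center_share:.1%}")  # 74.1%
```

On these rounded inputs, data centers account for roughly three-quarters of the quarter's revenue, which is the clearest sign of where the company's growth is coming from.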

Furthermore, its revenue and net income are expected to keep rising, which could make it one of the most valuable tech companies in the world in the near future. Its revenue may even surpass Cisco's in the next few quarters, a sign of how prominent a player it is becoming in the enterprise networking market.

These massive gains in revenue come off the back of its GPU sales, for which it has been charging premium prices. This shows that enterprises are willing to pay top dollar for NVIDIA GPUs that enable high-performance computing.

How Data Centers Could Benefit

The innovations made by NVIDIA also present unique opportunities for data centers and any large enterprise that runs its business on its own equipment. Hardware equipped with NVIDIA GPUs has already become highly sought after by companies looking to cash in on the AI frenzy and develop their own applications on-site.

Of course, such equipment comes with a hefty price tag, raising capital expenditure (CapEx) at a time when many companies are cutting IT budgets and delaying refresh cycles. Eventually, however, enterprises that want to take advantage of AI technology, either for their own use or for their customers, will have to invest in hardware that provides the computing power AI workloads require.

Data centers that invest in AI-ready hardware will be better positioned against their competitors. More importantly, they can use AI to transform how they run their facilities.

Making the Right Hardware Choices for Your Data Center

The enterprise hardware space is going through a renaissance, and the NVIDIA AI Enterprise platform is part of it.

Vendors are investing heavily in making equipment suitable for supporting AI applications at every level. For any enterprise with hardware on-site or with a colocation provider, the next few refresh cycles will call for some critical decisions that will, in turn, impact the business in the long run.

Choosing the right vendor and equipment is an important decision that must be made according to business goals and budgetary requirements. PivIT, as a hardware procurement specialist, has enabled many businesses to acquire the equipment they need to advance their infrastructure without exceeding their budgets.

If you want to upgrade your infrastructure and prepare it for AI with NVIDIA GPU-powered servers, PivIT can help you find the latest equipment.